Spring is right around the corner and the sun is shining upon us again 🙂 This winter has been put to very good use by yours truly. I’ve learned to hear again!

I moved in with my fabulous girlfriend, and we have bought an apartment together :-)

I have a better relationship with my son, now that I am able to really TALK with him (and give the right responses, now that I’m not always tired out of my skull).

And I have taken up cross-country skiing again. It had been more than 15 years since I last roamed the ski tracks in the Norwegian woods 🙂

6 month re-map milestone

I had a re-map a week ago. That was my 6-month appointment. This time we increased the volume of all frequencies again. In addition, I now have one program with increased dynamic range (70%) and one program with decreased dynamic range (50%).

Dynamic range is the range of frequencies that the microphone and processor pass into the implant. 50% means that 25% of the frequencies are left out at each end of the frequency range (meaning both very deep bass and very high pitches are reduced, keeping only the sounds in the middle).

I had 60% for the last few months. I mainly use 70% now, as I find it much more pleasant to hear as much as possible. But it is tiring too; it takes time for the brain to develop noise-filtering skills. So I use the 50% program when I am tired and need to shield myself from some of the ambient noise around me.

Ambivalent regarding current sound quality

I am not happy about the quality of sound… After this mapping it feels like I took one step back in terms of speech comprehension. When I switch back to my previous program (yes, I kept that one; I think it was a good move in terms of motivating myself) it just sounds really, really bad. So the new adjustments are definitely a step in the right direction, but it seems like every time the frequencies are re-mapped, my brain needs a new period of adjustment… Tiring, somewhat de-motivating and a little bit frustrating. But these issues are, by all means, peanuts compared to what lies behind me at this point. I am simply super-impatient. 🙂

The speech of others is super crisp and sharp. It is hard to get used to after decades of dull, watery, “cottonish” mumble-jumble. It is kind of like touching an area of skin that has been burnt: very sensitive, painful and unavoidable. The nerves that receive the electronic impulses from my implant feel raw and exposed.

Tinnitus and stress management

I’ve come a long way with my tinnitus management self-study course. I’ve learned to control my level of stress, both psychologically and physically. Yes, the stress is there. The stressors will never go away. BUT I can better control my own stress reactions, and I can get rid of the worst tension, so the tiredness, irritation and other related symptoms are lessened.

The self-study course works like this: (very boiled down)

First you learn to relax muscles “manually”. When you have relaxed many, many times, you learn to do it quicker and easier. It’s like learning anything else. At first it’s a little hard and awkward, but with enough exercise and repetition, the results start to appear.

The key words are muscle awareness and relaxation, then breathing technique. Breathing while stressed is short, shallow and comes from the chest, whilst breathing from the stomach forces and enables longer and deeper breaths, thus tricking your body into believing you’re not stressed. When we are relaxed, all our breathing originates from the abdominal region, and the chest does not move much.

The concept is quite ingenious: when you and your body start to remember how to get to the relaxed state, you can use memory techniques to invoke that memory really fast (e.g. a code word while breathing out slowly). By bringing that memory to the front of your consciousness, the previously learned and experienced relaxation kicks in.

Personal gain and experiences

These days I am much more conscious of my level of stress. Every time I drive my car, I notice the stress coming (traffic is full of stressors). I use the time in the car to practice bringing my stress down.

I get stressed when playing an online game, so I practice getting rid of the stress then as well.

I feel like I’m getting better psychosomatically. Less pain, more rested, and I am healing faster (I dislocated my shoulder, and a week later I am almost without pain!). Last time I dislocated my shoulder, I needed physical therapy and did months of training to become pain-free again. (Of course the damage was much more severe back then, but a dislocated shoulder is still a major pain :-) )

I feel like I can endure more, but I am not sure if that is due to my stress management training alone. I guess it’s part that, part CI sound improving, part more daylight, sunshine and warmer temperatures 🙂

Things are improving, I’m penetrating the dark clouds slowly and surely, the blue sky is closer than ever 🙂

linked, as the word “expectation” was just linked.. That way we will be on the “same page”.

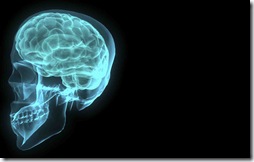

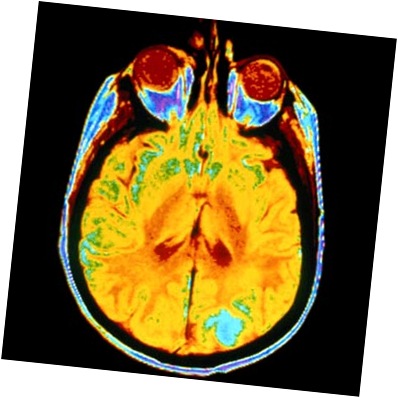

Well, obviously my brain has some work to do! The rewiring of the neural pathways is one thing, and the brain’s processing is another. I believe we can agree that the neural rewiring, both in our nervous system and in our brains (which, I agree, are in fact part of our nervous system), is about new synaptic paths forming, adjusting our nervous system to the new sensory reality.

I will forget the old information and replace it with the new. Just as the Borg in Star Trek say: “You will be assimilated.”